Kafka Connect Tutorial

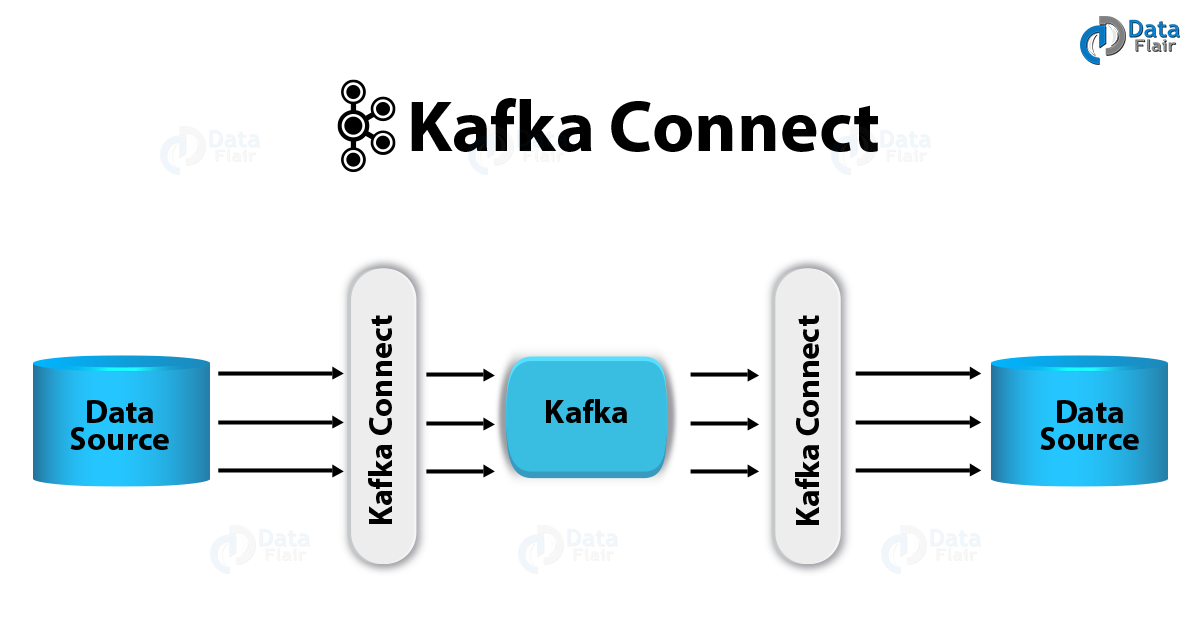

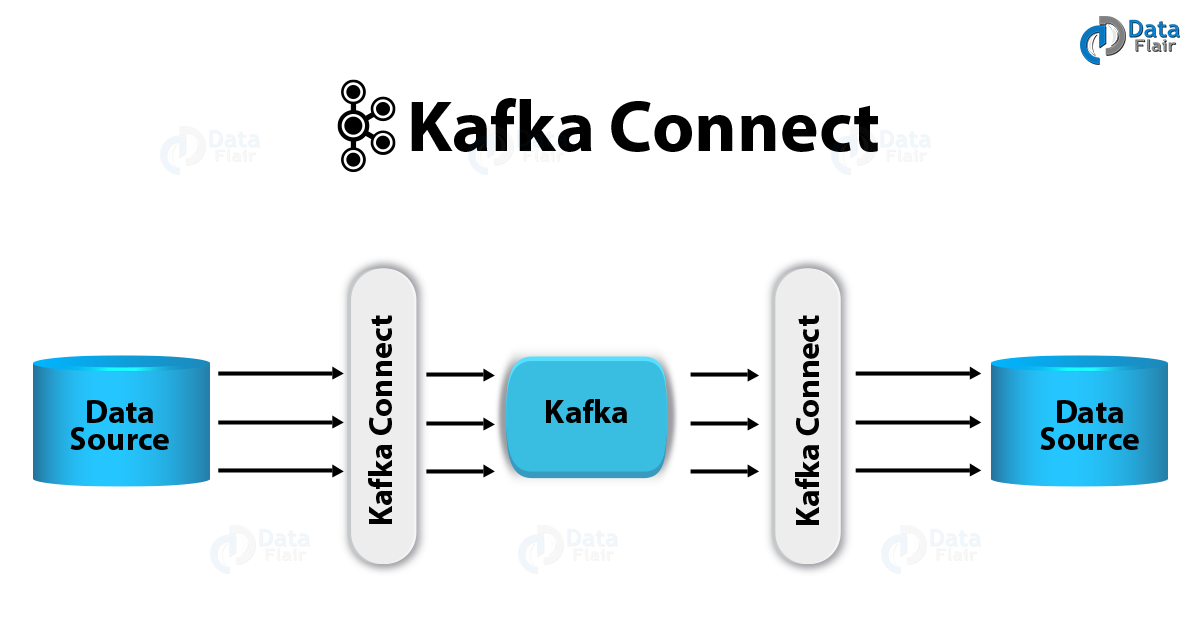

Kafka Connect is a tool to reliably and scalably stream data between Kafka and other systems. To communicate with the Kafka Connect service, you can use curl to send REST API requests to port 8083 of the Docker host, which you mapped to port 8083 of the connect container when you started Kafka Connect.
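A minimal Python sketch of the same REST calls, using only the standard library. It assumes the worker's port 8083 is published on `localhost`. `connect_request` and `send` are hypothetical helper names, not part of any Kafka client library.

```python
import json
import urllib.request

# Assumption: the worker's REST port 8083 is published on the Docker host.
CONNECT_URL = "http://localhost:8083"


def connect_request(path, method="GET", body=None, base_url=CONNECT_URL):
    """Build (but do not send) a request for the Kafka Connect REST API."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    req = urllib.request.Request(base_url + path, data=data, method=method)
    req.add_header("Accept", "application/json")
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


def send(req):
    """Send the request to a running worker and decode any JSON reply."""
    with urllib.request.urlopen(req) as resp:
        raw = resp.read()
    # Some calls (for example DELETE) return an empty body.
    return json.loads(raw) if raw else None
```

For example, `send(connect_request("/connectors"))` should behave like `curl http://localhost:8083/connectors` and return the list of connector names, while `/connector-plugins` lists the plugins installed on the worker.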

A JSON document with an embedded schema contains both the message contents and a schema that describes the data.

Kafka Connect offers an API, a runtime, and a REST service that let developers quickly define connectors that move large data sets into and out of Kafka. This tutorial walks you through integrating Kafka Connect with an event hub and deploying basic FileStreamSource and FileStreamSink connectors. Running connectors inside a common framework also lets Kafka Connect make guarantees that are difficult to achieve using other frameworks.
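A sketch of the two FileStream connector configurations as REST request bodies. The connector names, file paths, and the `connect-test` topic are illustrative assumptions. Note that the source connector takes a single `topic`, while the sink connector reads from `topics`.

```python
import json

# Hypothetical names: connector names, file paths, and topic are illustrative.
TOPIC = "connect-test"

file_source = {
    "name": "local-file-source",
    "config": {
        "connector.class": "FileStreamSource",
        "tasks.max": "1",
        "file": "/tmp/test.txt",      # file to read, one record per line
        "topic": TOPIC,               # a source writes to a single topic
    },
}

file_sink = {
    "name": "local-file-sink",
    "config": {
        "connector.class": "FileStreamSink",
        "tasks.max": "1",
        "file": "/tmp/test.sink.txt",  # file the sink appends records to
        "topics": TOPIC,               # a sink takes a comma-separated topic list
    },
}


def to_request_body(connector):
    """Serialize a connector definition as the JSON body for POST /connectors."""
    return json.dumps(connector, indent=2)


print(to_request_body(file_source))
```

Each body would be POSTed to `/connectors` on the worker. In recent Kafka releases the FileStream connector JAR may not be on the worker's classpath by default, so you might need to add it to the worker's `plugin.path`.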

Apache Kafka is a powerful, scalable, fault-tolerant, distributed streaming platform. To import data from external systems into Kafka topics, and to export data from Kafka topics into external systems, the Apache Kafka project provides another component: Kafka Connect. In this Kafka Connect tutorial we will study how to do both.

It will also cover how to declare the … There are connectors that help move huge data sets into and out of Kafka. Kafka Connect is an integral component of an ETL pipeline when …

In this tutorial we will use docker-compose and MySQL 8 to demonstrate a Kafka connector, with MySQL as the data source. We can use existing connector implementations for common data sources and sinks, or implement our own connectors.
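One way to use MySQL as a source, sketched in Python as a connector configuration. This assumes Confluent's JDBC source connector and the MySQL JDBC driver are installed on the worker, and that docker-compose runs MySQL under the service name `mysql`. The database, table, credentials, and the helper `mysql_jdbc_source` are hypothetical.

```python
# Assumptions: Confluent's JDBC source connector and the MySQL JDBC driver are
# installed on the Connect worker; docker-compose names the MySQL service "mysql".
# Database, table, credentials, and this helper are hypothetical.
def mysql_jdbc_source(name, host="mysql", port=3306, database="demo",
                      table="customers", id_column="id"):
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
            "tasks.max": "1",
            "connection.url": f"jdbc:mysql://{host}:{port}/{database}",
            "connection.user": "connect_user",          # hypothetical credentials
            "connection.password": "connect_password",
            "table.whitelist": table,
            # Poll for new rows using a strictly increasing column.
            "mode": "incrementing",
            "incrementing.column.name": id_column,
            # Resulting topic name is prefix + table name, e.g. "mysql-customers".
            "topic.prefix": "mysql-",
        },
    }


source = mysql_jdbc_source("mysql-source")
```

In `incrementing` mode the connector only picks up rows whose `id` is greater than the last one it saw, so updates to existing rows are not captured; a change-data-capture connector such as Debezium is the usual alternative when you need them.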

In this tutorial we'll show you how to connect Kafka to Elasticsearch with minimal effort using the Elasticsearch sink connector. Connectors are the Kafka components that can be set up to listen for changes in a data source, such as a file or database, and pull in those changes automatically. At the end …
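A hedged sketch of an Elasticsearch sink configuration, assuming Confluent's Elasticsearch sink connector is installed and Elasticsearch is reachable at `http://elasticsearch:9200` (a hypothetical docker-compose host name). The helper name and topics are illustrative.

```python
# Assumption: Confluent's Elasticsearch sink connector is installed on the worker.
def elasticsearch_sink(name, topics, es_url="http://elasticsearch:9200"):
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
            "tasks.max": "1",
            "topics": ",".join(topics),
            "connection.url": es_url,
            # Build document IDs from topic+partition+offset, not the record key.
            "key.ignore": "true",
            # Let Elasticsearch infer mappings instead of using the Connect schema.
            "schema.ignore": "true",
        },
    }


sink = elasticsearch_sink("es-sink", ["orders", "users"])
```

With `key.ignore` set to `true`, a record that is delivered twice maps to the same document ID, so it overwrites the existing document rather than creating a duplicate.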

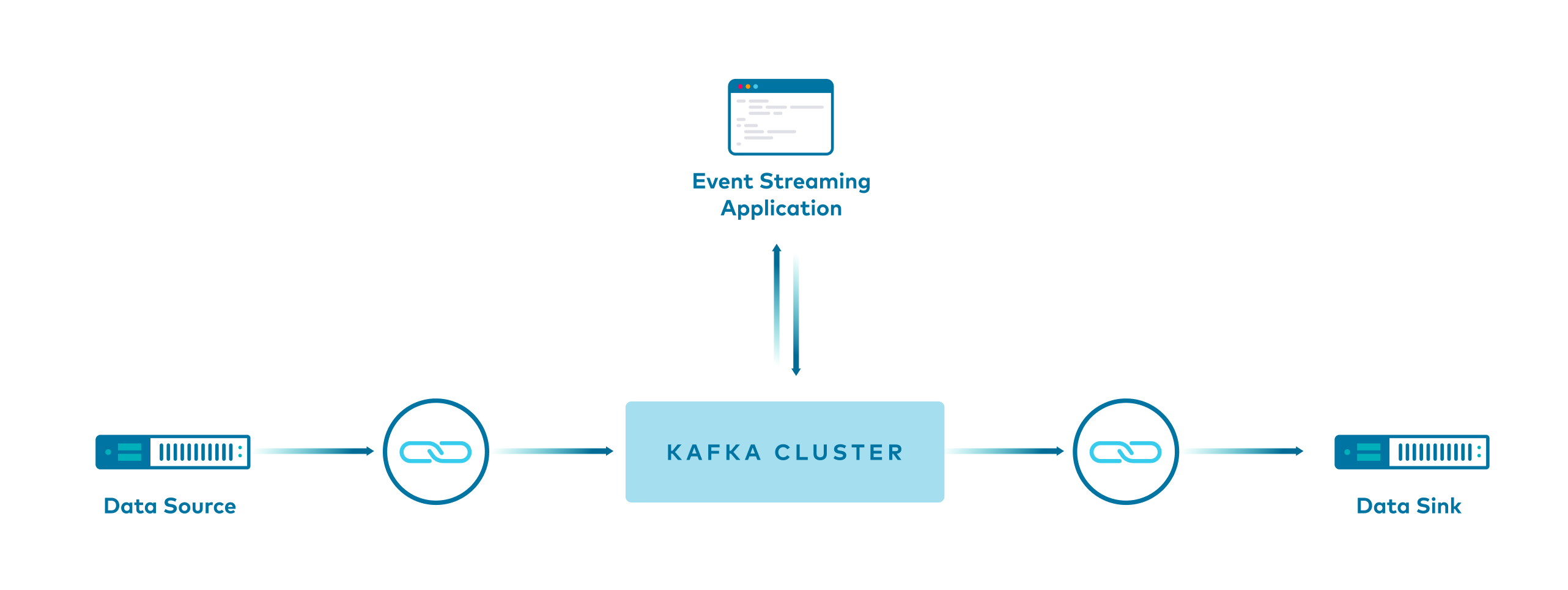

Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other systems using source and sink connectors. Kafka Connect supports JSON documents with embedded schemas. This feature is currently in preview.
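With Kafka's `JsonConverter` and `schemas.enable` turned on, each message is an envelope holding a `schema` and a `payload`. The sketch below builds such an envelope for a flat record; `with_embedded_schema` is a hypothetical helper, and it marks every field as required.

```python
import json

# Converter settings that make Kafka Connect read/write the schema envelope.
CONVERTER_SETTINGS = {
    "value.converter": "org.apache.kafka.connect.json.JsonConverter",
    "value.converter.schemas.enable": "true",
}


def with_embedded_schema(payload, fields):
    """Wrap a flat record in a schema/payload envelope.

    `fields` maps field name -> Connect type name ("int32", "string", ...).
    Hypothetical helper: every field is marked as non-optional.
    """
    if set(payload) != set(fields):
        raise ValueError("payload keys must match the declared fields")
    return {
        "schema": {
            "type": "struct",
            "fields": [
                {"field": name, "type": ftype, "optional": False}
                for name, ftype in fields.items()
            ],
            "optional": False,
        },
        "payload": payload,
    }


doc = with_embedded_schema({"id": 1, "name": "alice"},
                           {"id": "int32", "name": "string"})
print(json.dumps(doc))
```

Embedding the schema in every message makes each record self-describing, at the cost of larger messages than plain JSON.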

This site features full code examples using Kafka, Kafka Streams, and ksqlDB to demonstrate real use cases. This tutorial is mainly based on the Kafka Connect Tutorial on Docker. However, the original tutorial is so outdated that it just won't work if you follow it step by step.

Kafka connectors are ready-to-use components that help us import data from external systems into Kafka topics and export data from Kafka topics into external systems. The tutorial "Moving Data In and Out of Kafka with Kafka Connect" provides a hands-on look at how you can move data into and out of Apache Kafka without writing a single line of code. In this Kafka Connect S3 tutorial, let's demo multiple Kafka S3 integration examples.
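A sketch of an S3 sink configuration, assuming Confluent's S3 sink connector is installed and AWS credentials are available to the worker. The bucket, region, topic names, and helper name are hypothetical. Listing several topics in the comma-separated `topics` setting is one way to write more than one topic to the same bucket.

```python
# Assumption: Confluent's S3 sink connector is installed and the worker can
# find AWS credentials. Bucket, region, and topics are hypothetical.
def s3_sink(name, topics, bucket, region="us-east-1", flush_size=1000):
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.connect.s3.S3SinkConnector",
            "tasks.max": "1",
            # One topic or several, comma-separated.
            "topics": ",".join(topics),
            "s3.bucket.name": bucket,
            "s3.region": region,
            "storage.class": "io.confluent.connect.s3.storage.S3Storage",
            "format.class": "io.confluent.connect.s3.format.json.JsonFormat",
            # Records written per S3 object before the file is committed.
            "flush.size": str(flush_size),
        },
    }


single = s3_sink("s3-orders", ["orders"], "my-demo-bucket")
multi = s3_sink("s3-many", ["orders", "payments"], "my-demo-bucket", flush_size=3)
```

`flush.size` controls how many records go into each S3 object; small values are handy for demos but produce many small objects.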

While these connectors are not meant for production use, they demonstrate an end-to-end Kafka Connect scenario in which Azure Event Hubs acts as a Kafka broker. However, there is much more to learn about Kafka Connect. Released as part of Apache Kafka 0.9, Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other data systems.
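When Azure Event Hubs acts as the broker, the Connect worker talks to it over SASL_SSL on port 9093, using the literal user name `$ConnectionString` and the namespace connection string as the password. The sketch below renders those worker properties. The namespace, connection string, and helper name are placeholders, and other required distributed-worker settings (such as `group.id`, the storage topics, and converters) are omitted.

```python
# Placeholders: namespace and connection string come from your Event Hubs setup.
# Other required worker settings (group.id, storage topics, converters) omitted.
def event_hubs_worker_properties(namespace, connection_string):
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        'username="$ConnectionString" '
        f'password="{connection_string}";'
    )
    security = {
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
    }
    props = {"bootstrap.servers": f"{namespace}.servicebus.windows.net:9093"}
    # Apply the same security settings to the worker itself and to the
    # producers and consumers it creates for connector tasks.
    for prefix in ("", "producer.", "consumer."):
        for key, value in security.items():
            props[prefix + key] = value
    return "\n".join(f"{key}={value}" for key, value in props.items())


text = event_hubs_worker_properties(
    "mynamespace",
    "Endpoint=sb://mynamespace.servicebus.windows.net/;SharedAccessKeyName=k;SharedAccessKey=s",
)
```

The `producer.` and `consumer.` prefixed copies configure the clients the worker creates for source and sink tasks; without them those clients would try to connect without authentication.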

Apache Kafka connector example: import data into Kafka. So let's start Kafka Connect. Kafka Connect is the integration API for Apache Kafka.

Kafka Connect is focused on streaming data to and from Kafka, making it simpler for you to write high-quality, reliable, and high-performance connector plugins. All the tutorials can be run locally or with Confluent Cloud, Apache Kafka as a fully managed cloud service. In this Kafka connector example, we shall deal with a simple use case.

Sources in Kafka Connect are responsible for ingesting data from other systems into Kafka, while sinks are responsible for writing data from Kafka to other systems. Note that Kafka Streams, another new feature, followed shortly afterwards in Apache Kafka 0.10. We'll cover writing to S3 from one topic and also from multiple Kafka source topics. Kafka Connect exposes a REST API to manage Debezium connectors.
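The REST API works the same way for Debezium connectors as for any other connector. Below is a sketch of checking the decoded response of `GET /connectors/<name>/status`. The helper names and the example status body are hypothetical, though the body follows the shape the worker returns (connector state plus a list of tasks, with a `trace` on failed tasks).

```python
def is_healthy(status):
    """True if the connector and all of its tasks report RUNNING.

    `status` is the decoded JSON body of GET /connectors/<name>/status.
    A connector with no tasks yet is treated as not healthy.
    """
    tasks = status.get("tasks", [])
    return (
        status["connector"]["state"] == "RUNNING"
        and len(tasks) > 0
        and all(task["state"] == "RUNNING" for task in tasks)
    )


def failed_tasks(status):
    """Map task id -> stack trace for every task in the FAILED state."""
    return {
        task["id"]: task.get("trace", "")
        for task in status.get("tasks", [])
        if task["state"] == "FAILED"
    }


# Hypothetical status body shaped like the worker's response.
example_status = {
    "name": "inventory-connector",
    "connector": {"state": "RUNNING", "worker_id": "connect:8083"},
    "tasks": [{"id": 0, "state": "FAILED", "worker_id": "connect:8083",
               "trace": "org.apache.kafka.connect.errors.ConnectException: ..."}],
    "type": "source",
}
```

A failed task can then be restarted with `POST /connectors/<name>/tasks/<id>/restart`.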

It enables you to stream data from source systems such as databases, message queues, SaaS platforms, and flat files into Kafka, and from Kafka to target systems. We'll also see an example of an S3 Kafka source connector reading files from S3 and writing them to Kafka.

It is helpful to review the concepts for Kafka Connect in tandem with running the steps in this guide to gain a deeper understanding. Kafka Connect is an open-source component and framework for connecting Kafka to external systems. There are different ways to set the message key correctly, and these tutorials will show you how.
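One common way to set the key is with Kafka Connect's built-in single message transforms: `ValueToKey` copies fields from the value into a struct key, and `ExtractField$Key` replaces that struct with the bare field value. The sketch below builds those settings. The `id` field, the transform aliases, and both helper names are assumptions, and `simulate_key` only mimics the effect on a plain dict.

```python
# Transform aliases ("valueToKey", "extractKey") are arbitrary, hypothetical names.
def key_from_field(field):
    """Connector settings that make `field` from the value the record key."""
    return {
        "transforms": "valueToKey,extractKey",
        # Step 1: copy the field into a struct key, e.g. {"id": 42}.
        "transforms.valueToKey.type": "org.apache.kafka.connect.transforms.ValueToKey",
        "transforms.valueToKey.fields": field,
        # Step 2: replace the struct key with the bare value, e.g. 42.
        "transforms.extractKey.type": "org.apache.kafka.connect.transforms.ExtractField$Key",
        "transforms.extractKey.field": field,
    }


def simulate_key(value, field):
    """Mimic the two transforms on a plain dict (illustration only)."""
    key_struct = {field: value[field]}  # after ValueToKey
    return key_struct[field]            # after ExtractField$Key


settings = key_from_field("id")
```

These settings would be merged into a connector's `config`, for example a JDBC source, so that every record is keyed by its primary key.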

Kafka Streams is a client library for processing and analyzing data stored in Kafka. A source connector collects data from a system. Source systems can be entire … This is a tutorial that shows how to set up and use Kafka Connect on Kubernetes using Strimzi, with the help of an example.
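With Strimzi, a connector can be declared as a `KafkaConnector` custom resource instead of calling the REST API directly. The sketch below builds one as a Python dict and prints it as JSON. It assumes the `kafka.strimzi.io/v1beta2` API version, a `KafkaConnect` resource named `my-connect-cluster` that carries the annotation `strimzi.io/use-connector-resources: "true"`, and an illustrative FileStream configuration; check the API version against your Strimzi release.

```python
import json


def strimzi_connector(name, connect_cluster, connector_class, config, tasks_max=1):
    """Build a KafkaConnector custom resource (assumed v1beta2 API)."""
    return {
        "apiVersion": "kafka.strimzi.io/v1beta2",
        "kind": "KafkaConnector",
        "metadata": {
            "name": name,
            # Must match the name of the KafkaConnect resource that runs it.
            "labels": {"strimzi.io/cluster": connect_cluster},
        },
        "spec": {
            "class": connector_class,
            "tasksMax": tasks_max,
            "config": config,
        },
    }


cr = strimzi_connector(
    "file-source",
    "my-connect-cluster",  # hypothetical KafkaConnect resource name
    "org.apache.kafka.connect.file.FileStreamSourceConnector",
    {"file": "/tmp/test.txt", "topic": "connect-test"},
)
print(json.dumps(cr, indent=2))
```

The printed JSON could be applied with `kubectl apply -f -`; the Strimzi operator then creates the connector through the Connect REST API on your behalf.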
